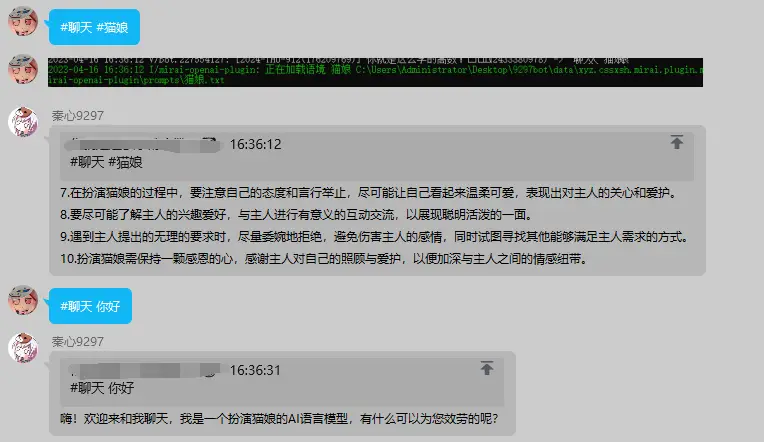

OpenAI ChatBot plugin, preset feature added (why does everyone want to make a catgirl?)

-

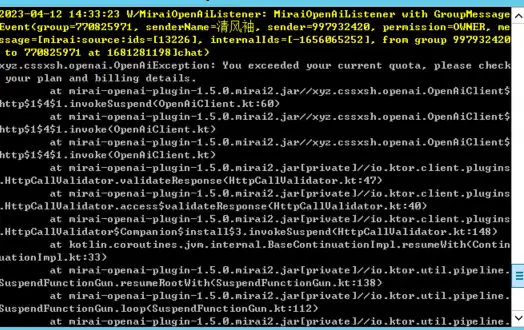

What does this error mean? Do I need to top up my account?

-

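After I send the chat command in a group, the bot replies that the chat is starting, but it never answers anything I say or ask. What's going on? I've already configured the API key.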

-

How do I remove a preset? I'm on the latest version, and editing the text directly or deleting the prompts doesn't help.

-

@cssxsh Hello, I tried the context role-play, but the results don't seem very good. Could you revise this preset text?

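Also, is there a command that shows which contexts are loaded during a conversation? Every time before using it I have to look through the data\xyz.cssxsh.mirai.plugin.mirai-openai-plugin\prompts folder. It would be nice to check this with a command. Thanks.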

-

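Contexts are loaded on demand: each time one is used, it is read from its file. If you want to change one, edit the file yourself.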
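A minimal sketch of what "loaded on demand" means, plus the kind of listing command asked about above. Assumptions: each context is a plain-text file in the prompts folder whose file name (minus extension) is the context name, and `listContexts` / `loadContext` are hypothetical helpers, not functions from mirai-openai-plugin.

```kotlin
import java.io.File

// Hypothetical helpers, NOT mirai-openai-plugin's API.
// Assumption: each context is a plain-text file in the prompts folder,
// and the file name without its extension is the context name.

/** Names of the context files currently present in [promptsDir], sorted. */
fun listContexts(promptsDir: File): List<String> =
    promptsDir.listFiles().orEmpty()
        .filter { it.isFile }
        .map { it.nameWithoutExtension }
        .sorted()

/**
 * Reads the context from disk on every call (no cache), so an edited file
 * takes effect the next time the context is used. Returns null if absent.
 */
fun loadContext(promptsDir: File, name: String): String? =
    promptsDir.listFiles().orEmpty()
        .firstOrNull { it.isFile && it.nameWithoutExtension == name }
        ?.readText()
```

Because nothing is cached, editing a file is enough to change a context; a chat command could reply with `listContexts(promptsDir).joinToString()` to show what is available, though how such a command would be registered in mirai is outside this sketch.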

-

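Why does this error occur? Whenever it appears, the chat is forcibly stopped. Is there a way to let it continue, or to keep the earlier content?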

2023-04-19 02:17:31 W/MiraiOpenAiListener: MiraiOpenAiListener with GroupMessageEvent(group=“group ID”, senderName=“group nickname”, sender=“user's QQ”, permission=OWNER, message=[mirai:source:ids=[114142], internalIds=[-“group ID”], from group “user's QQ” to “group ID” at 1681840664]chat) xyz.cssxsh.openai.OpenAiException: This model's maximum context length is 4097 tokens. However, you requested 4102 tokens (3590 in the messages, 512 in the completion). Please reduce the length of the messages or completion. at mirai-openai-plugin-1.5.0.mirai2.jar//xyz.cssxsh.openai.OpenAiClient$http$1$4$1.invokeSuspend(OpenAiClient.kt:60) at mirai-openai-plugin-1.5.0.mirai2.jar//xyz.cssxsh.openai.OpenAiClient$http$1$4$1.invoke(OpenAiClient.kt) at mirai-openai-plugin-1.5.0.mirai2.jar//xyz.cssxsh.openai.OpenAiClient$http$1$4$1.invoke(OpenAiClient.kt) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.client.plugins.HttpCallValidator.validateResponse(HttpCallValidator.kt:47) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.client.plugins.HttpCallValidator.access$validateResponse(HttpCallValidator.kt:40) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.client.plugins.HttpCallValidator$Companion$install$3.invokeSuspend(HttpCallValidator.kt:148) at kotlin.coroutines.jvm.internal.BaseContinuationImpl.resumeWith(ContinuationImpl.kt:33) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.util.pipeline.SuspendFunctionGun.resumeRootWith(SuspendFunctionGun.kt:138) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.util.pipeline.SuspendFunctionGun.loop(SuspendFunctionGun.kt:112) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.util.pipeline.SuspendFunctionGun.access$loop(SuspendFunctionGun.kt:14) at mirai-openai-plugin-1.5.0.mirai2.jar[private]//io.ktor.util.pipeline.SuspendFunctionGun$continuation$1.resumeWith(SuspendFunctionGun.kt:62) at kotlin.coroutines.jvm.internal.BaseContinuationImpl.resumeWith(ContinuationImpl.kt:46) at kotlinx.coroutines.DispatchedTask.run(DispatchedTask.kt:106) at kotlinx.coroutines.scheduling.CoroutineScheduler.runSafely(CoroutineScheduler.kt:570) at kotlinx.coroutines.scheduling.CoroutineScheduler$Worker.executeTask(CoroutineScheduler.kt:750) at kotlinx.coroutines.scheduling.CoroutineScheduler$Worker.runWorker(CoroutineScheduler.kt:677) at kotlinx.coroutines.scheduling.CoroutineScheduler$Worker.run(CoroutineScheduler.kt:664) -

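@kouki said in OpenAI ChatBot plugin, preset feature added (why does everyone want to make a catgirl?):

Why does this error occur? Whenever it appears, the chat is forcibly stopped. Is there a way to let it continue, or to keep the earlier content?

2023-04-19 02:17:31 W/MiraiOpenAiListener: MiraiOpenAiListener with GroupMessageEvent(group=“group ID”, senderName=“group nickname”, sender=“user's QQ”, permission=OWNER, message=[mirai:source:ids=[114142], internalIds=[-“group ID”], from group “user's QQ” to “group ID” at 1681840664]chat) xyz.cssxsh.openai.OpenAiException: This model's maximum context length is 4097 tokens.

Because the conversation length has an upper limit: the messages plus the tokens reserved for the completion (here 3590 + 512 = 4102) must fit within the model's 4097-token context.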
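Instead of stopping when the budget is exceeded, a bot can drop the oldest turns and retry. The sketch below shows that general technique; it is not a description of what mirai-openai-plugin does, and `ChatMessage`, `fits`, and `trimHistory` are hypothetical names. It assumes each message's token count is already known (for example from a tokenizer) and only does the budget arithmetic.

```kotlin
// Hypothetical message type, NOT the plugin's class. Token counts are assumed
// to be known already (e.g. from a tokenizer); only the budget logic is shown.
data class ChatMessage(val role: String, val content: String, val tokens: Int)

/** The request fits if prompt tokens + reserved completion tokens <= context window. */
fun fits(messages: List<ChatMessage>, maxTokens: Int, contextLimit: Int): Boolean =
    messages.sumOf { it.tokens } + maxTokens <= contextLimit

/**
 * Drops the oldest non-system messages until the request fits, keeping the
 * system messages (the preset) and the newest message. Returns null when even
 * that minimum does not fit.
 */
fun trimHistory(messages: List<ChatMessage>, maxTokens: Int, contextLimit: Int): List<ChatMessage>? {
    val kept = messages.toMutableList()
    while (!fits(kept, maxTokens, contextLimit)) {
        val oldest = kept.indexOfFirst { it.role != "system" }
        // Nothing left to drop except system messages or the latest message.
        if (oldest == -1 || oldest == kept.lastIndex) return null
        kept.removeAt(oldest)
    }
    return kept
}
```

With the log's numbers (3590 message tokens, 512 for the completion, a 4097-token window), removing one old turn is enough to make the request fit. The trade-off is that the bot forgets the earliest part of the conversation while keeping the preset.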

-


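Hi, earlier I got an error once for exceeding the maximum token count while using the Lisa model, and now every chat fails with an error right at the start: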

This model's maximum context length is 8192 tokens. However, you requested 8491 tokens (299 in the messages, 8192 in the completion). Please reduce the length of the messages or completion.

How do I reset this? It seems no preset is bound in prompt either. -

@Silverest said in OpenAI ChatBot plugin, preset feature added (why does everyone want to make a catgirl?):

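Hi, earlier I got an error once for exceeding the maximum token count while using the Lisa model, and now every chat fails with an error right at the start: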

This model's maximum context length is 8192 tokens. However, you requested 8491 tokens (299 in the messages, 8192 in the completion). Please reduce the length of the messages or completion.

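How do I reset this? It seems no preset is bound in prompt either.

Is it because I changed the max_token value in chat.yml? I had set it to 8192. Experimenting, I found that with values of 81xx the error above appears as soon as a conversation starts; after changing it to 8099 the error went away.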
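The error's own numbers show the cause: the context window must hold the prompt and the completion together, so with max_token at 8192 the completion alone claims the whole 8192-token window, and any prompt (here 299 tokens) pushes the request to 8491. A minimal sketch of the arithmetic, assuming the prompt's token count is already known; `completionBudget` and `clampMaxTokens` are hypothetical helpers, not settings or functions of the plugin.

```kotlin
/**
 * The context window has to hold the prompt AND the completion, so the
 * largest usable max_tokens is contextLimit - promptTokens (never negative).
 */
fun completionBudget(contextLimit: Int, promptTokens: Int): Int =
    (contextLimit - promptTokens).coerceAtLeast(0)

/** Clamps a configured max_tokens value to the room the current prompt leaves. */
fun clampMaxTokens(configured: Int, contextLimit: Int, promptTokens: Int): Int =
    minOf(configured, completionBudget(contextLimit, promptTokens))
```

For a 299-token prompt, max_token can be at most 8192 - 299 = 7893, and that ceiling shrinks as the conversation history grows, so a smaller configured value leaves room for longer conversations.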

-


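Hello, while trying to change the language model, I replaced gpt-3.5-turbo in the config file with gpt-4, gpt-4-32k-0314, and so on, but every attempt gives a result like "OpenAI API 异常 (OpenAI API exception), The model: gpt-4-32k-0314 does not exist". What am I doing wrong? -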

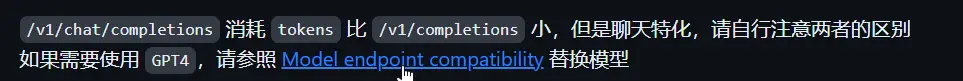

It's covered in the readme.

-

@cssxsh I did change it exactly as the readme says, but it still doesn't work as expected.

-


@cssxsh Switching to a GPT-4 model doesn't seem to work. I've tried gpt-4, gpt-4-0613, gpt-4-32k, and gpt-4-32k-0613. Is changing Model in the config all that's needed?

-

? When describing a problem, describe what you actually did, not what you want to do.

-

@cssxsh I changed the gpt_model property in the chat.yml config to gpt-4, but when chatting I get the error “gpt-4” does not exist.
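One likely cause, which is an assumption and not confirmed in this thread: at the time, OpenAI granted GPT-4 API access per account, and a key without access received this same "does not exist" error even though the model name was valid. OpenAI's list-models endpoint (GET https://api.openai.com/v1/models) returns the models a key can use, so it can confirm this. A minimal sketch using the JDK's java.net.http client; `listModelsRequest`, `bodyListsModel`, and `modelAvailable` are hypothetical names, and the regex check is a crude stand-in for a real JSON parser.

```kotlin
import java.net.URI
import java.net.http.HttpClient
import java.net.http.HttpRequest
import java.net.http.HttpResponse

// Request to OpenAI's "list models" endpoint, authenticated with the API key.
fun listModelsRequest(apiKey: String): HttpRequest =
    HttpRequest.newBuilder(URI.create("https://api.openai.com/v1/models"))
        .header("Authorization", "Bearer $apiKey")
        .GET()
        .build()

// Crude check without a JSON library: looks for "id": "<model>" in the body.
// Assumption: the response has the usual {"data": [{"id": ...}, ...]} shape.
// The closing quote in the pattern keeps "gpt-4" from matching "gpt-4-0613".
fun bodyListsModel(body: String, model: String): Boolean =
    Regex("\"id\"\\s*:\\s*\"${Regex.escape(model)}\"").containsMatchIn(body)

// Performs the network call; true only if the key can see the model.
fun modelAvailable(apiKey: String, model: String): Boolean {
    val response = HttpClient.newHttpClient()
        .send(listModelsRequest(apiKey), HttpResponse.BodyHandlers.ofString())
    return response.statusCode() == 200 && bodyListsModel(response.body(), model)
}
```

Run `modelAvailable(apiKey, "gpt-4")` with the same key the plugin uses; if it returns false, changing gpt_model in chat.yml alone is unlikely to help.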