@第六神觉 Check the permissions and the login protocol.

Posts by 南栀沁寒

-

RE: ChatLearning: Let your bot learn from your group chat, posted in Plugin Releases

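Please add an on/off switch for the reply-when-@-mentioned feature. I use @ mentions for a different reply plugin, but I'd still like to use the new cosmatch feature. Also, if the lexicon is very large, will enabling cosmatch make the bot reply noticeably more slowly?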
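How ChatLearning actually implements cosmatch isn't described in this thread, so the following is only a minimal sketch under one assumption: that cosmatch scores the incoming message against every stored question by cosine similarity of character-count vectors. In that design each message is compared with the whole lexicon, so matching time grows roughly linearly with lexicon size. Whether the plugin indexes or caches anything to avoid this is unknown. `cos_match`, `threshold` and the sample lexicon are hypothetical.

```python
import math
from collections import Counter


def char_vector(text: str) -> Counter:
    """Bag-of-characters vector: character -> count (assumed featurization)."""
    return Counter(text)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[ch] for ch, count in a.items() if ch in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def cos_match(message: str, lexicon: dict, threshold: float = 0.6):
    """Linear scan: score the message against every lexicon key.

    One similarity computation per entry, so cost is O(len(lexicon))
    per incoming message.
    """
    query = char_vector(message)
    best_key, best_score = None, 0.0
    for key in lexicon:
        score = cosine(query, char_vector(key))
        if score > best_score:
            best_key, best_score = key, score
    if best_key is not None and best_score >= threshold:
        return lexicon[best_key], best_score
    return None, best_score


if __name__ == "__main__":
    lexicon = {"今天天气怎么样": "挺好的", "你吃饭了吗": "吃了"}
    print(cos_match("今天天气如何", lexicon))  # reply '挺好的', score ≈ 0.707
```

Precomputing each key's vector once instead of rebuilding it per message would trim constant overhead, but the scan itself stays linear; sub-linear lookup would need something like an inverted index from characters to entries.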

-

RE: [Mirai-NLP] GPT2-Chinese model training tutorial, posted in Technical Discussion

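@Mitr-yuzr Got it working! Looking forward to the next part. How do you plan to connect the trained model to the bot afterwards, and what will trigger a reply (an @ mention, or a probability-based reply)?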
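The tutorial author's actual plan isn't stated, so this only sketches the two triggers the question mentions, combined into one hypothetical policy: reply whenever the bot is @-mentioned (behind an on/off switch), otherwise reply with a fixed probability. The function name, the plain-text `@` check and the parameters are all assumptions; a real Mirai plugin would inspect the message chain for a mention of the bot rather than search the text.

```python
import random
from typing import Optional


def should_reply(text: str, bot_mention: str, reply_probability: float,
                 reply_on_mention: bool = True,
                 rng: Optional[random.Random] = None) -> bool:
    """Hypothetical trigger policy for a generative chat bot.

    - If reply_on_mention is on and the bot is @-mentioned, always reply.
    - Otherwise reply with probability reply_probability (0.0 to 1.0).
    """
    if reply_on_mention and bot_mention in text:
        return True
    rng = rng or random.Random()
    # random() returns a float in [0.0, 1.0), so 0.0 never replies and 1.0 always does.
    return rng.random() < reply_probability
```

Passing a seeded `random.Random(42)` as `rng` makes the probabilistic branch reproducible in tests.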

-

RE: [Mirai-NLP] GPT2-Chinese model training tutorial, posted in Technical Discussion

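I followed every step exactly, but training finished after only 39 seconds and the model doesn't seem to have been trained at all. What's going on? The generated text is also still in the default style.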
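One possible reading of the log below, based on our understanding of GPT2-Chinese's `train.py` (an assumption; check it against the copy you are running): the script estimates `total_steps = int(full_len / stride * epochs / batch_size / gradient_accumulation)`, cuts each tokenized piece into `n_ctx`-token windows every `stride` tokens, and trains `len(samples) // batch_size` batches per piece, dropping the remainder. With `total steps = 63`, `stride=768`, `epochs=30` and `batch_size=8`, the whole corpus works out to only about 13,000 tokens spread across `num_pieces=31353` pieces. If most pieces hold fewer than `n_ctx` tokens, each yields at most one window, and `1 // 8 == 0` batches, which would fit the ~0.6 s epochs and the absence of any per-step loss lines even with `log_step=1`. The build step also appears to keep only lines longer than `min_length` (128) characters, which would drop most short chat messages. The helper names below are hypothetical.

```python
def estimated_total_steps(full_len: int, stride: int, epochs: int,
                          batch_size: int, gradient_accumulation: int = 1) -> int:
    """Step estimate printed as 'total steps = ...' (formula assumed from train.py)."""
    return int(full_len / stride * epochs / batch_size / gradient_accumulation)


def windows_in_piece(num_tokens: int, n_ctx: int, stride: int) -> int:
    """Count the n_ctx-token windows cut from one tokenized piece (assumed logic)."""
    count, start = 0, 0
    # Slide an n_ctx window forward by `stride` while a full window still fits.
    while start < num_tokens - n_ctx:
        count += 1
        start += stride
    # Any leftover tail becomes one final window.
    if start < num_tokens:
        count += 1
    return count


def batches_per_epoch(piece_lengths, n_ctx: int, stride: int, batch_size: int) -> int:
    """Batches actually trained per epoch when each piece drops its partial last batch."""
    return sum(windows_in_piece(n, n_ctx, stride) // batch_size for n in piece_lengths)


if __name__ == "__main__":
    # 63 steps with stride=768, epochs=30, batch_size=8 implies ~12,903-13,107 tokens.
    print(estimated_total_steps(12_903, stride=768, epochs=30, batch_size=8))  # 63
    # Illustrative: ~13k tokens scattered thinly over 31,353 pieces -> no full batch anywhere.
    scattered = [1] * 12_903 + [0] * (31_353 - 12_903)
    print(batches_per_epoch(scattered, n_ctx=1024, stride=768, batch_size=8))  # 0
    # The same tokens in a single piece -> 17 windows -> 2 batches per epoch.
    print(batches_per_epoch([12_903], n_ctx=1024, stride=768, batch_size=8))  # 2
```

Under these assumptions, a much smaller `num_pieces` (for example 1) and a lower `min_length` for short chat lines should let full batches form, although ~13k tokens would still be a very small training set.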

```
2022-10-02 14:51:34.665015: W tensorflow/core/common_runtime/gpu/gpu_bfc_allocator.cc:39] Overriding allow_growth setting because the TF_FORCE_GPU_ALLOW_GROWTH environment variable is set. Original config value was 0.
args:
Namespace(batch_size=8, bpe_token=False, device='0,1,2,3', encoder_json='tokenizations/encoder.json', epochs=30, fp16=False, fp16_opt_level='O1', gradient_accumulation=1, log_step=1, lr=0.00015, max_grad_norm=1.0, min_length=128, model_config='/content/drive/MyDrive/mirai-gpt2/pretrain_model/config.json', num_pieces=31353, output_dir='/content/drive/MyDrive/mirai-gpt2/', pretrained_model='/content/drive/MyDrive/mirai-gpt2/pretrain_model', raw=True, raw_data_path='data/train.json', save_every=5, segment=False, start_epoch=0, stride=768, tokenized_data_path='data/tokenized/', tokenizer_path='/content/drive/MyDrive/mirai-gpt2/pretrain_model/vocab.txt', vocab_bpe='tokenizations/vocab.bpe', warmup_steps=2000, writer_dir='tensorboard_summary/')
config:
{
  "activation_function": "gelu_new",
  "architectures": [
    "GPT2LMHeadModel"
  ],
  "attn_pdrop": 0.1,
  "embd_pdrop": 0.1,
  "finetuning_task": null,
  "gradient_checkpointing": false,
  "initializer_range": 0.02,
  "layer_norm_epsilon": 1e-05,
  "model_type": "gpt2",
  "n_ctx": 1024,
  "n_embd": 768,
  "n_head": 12,
  "n_inner": null,
  "n_layer": 6,
  "n_positions": 1024,
  "num_labels": 1,
  "output_attentions": false,
  "output_hidden_states": false,
  "output_past": true,
  "pruned_heads": {},
  "resid_pdrop": 0.1,
  "summary_activation": null,
  "summary_first_dropout": 0.1,
  "summary_proj_to_labels": true,
  "summary_type": "cls_index",
  "summary_use_proj": true,
  "task_specific_params": {
    "text-generation": {
      "do_sample": true,
      "max_length": 400
    }
  },
  "tokenizer_class": "BertTokenizer",
  "torchscript": false,
  "use_bfloat16": false,
  "vocab_size": 21128
}
using device: cuda
building files
reading lines
100% 31353/31353 [00:01<00:00, 25351.73it/s]
finish
files built
number of parameters: 59541504
calculating total steps
100% 31353/31353 [00:00<00:00, 46655.81it/s]
total steps = 63
starting training
epoch 1
time: 2022-10-02 14:51:40.207784
epoch 1 finished
time: 2022-10-02 14:51:40.822857
time for one epoch: 0:00:00.615073
epoch 2
time: 2022-10-02 14:51:40.822894
epoch 2 finished
time: 2022-10-02 14:51:41.449992
time for one epoch: 0:00:00.627098
epoch 3
time: 2022-10-02 14:51:41.450048
epoch 3 finished
time: 2022-10-02 14:51:42.077769
time for one epoch: 0:00:00.627721
epoch 4
time: 2022-10-02 14:51:42.077812
epoch 4 finished
time: 2022-10-02 14:51:42.708398
time for one epoch: 0:00:00.630586
epoch 5
time: 2022-10-02 14:51:42.708441
saving model for epoch 5
epoch 5 finished
time: 2022-10-02 14:51:44.504427
time for one epoch: 0:00:01.795986
epoch 6
time: 2022-10-02 14:51:44.504479
epoch 6 finished
time: 2022-10-02 14:51:45.159980
time for one epoch: 0:00:00.655501
epoch 7
time: 2022-10-02 14:51:45.160022
epoch 7 finished
time: 2022-10-02 14:51:45.775230
time for one epoch: 0:00:00.615208
epoch 8
time: 2022-10-02 14:51:45.775269
epoch 8 finished
time: 2022-10-02 14:51:46.400834
time for one epoch: 0:00:00.625565
epoch 9
time: 2022-10-02 14:51:46.400874
epoch 9 finished
time: 2022-10-02 14:51:47.023521
time for one epoch: 0:00:00.622647
epoch 10
time: 2022-10-02 14:51:47.023573
saving model for epoch 10
epoch 10 finished
time: 2022-10-02 14:51:49.037504
time for one epoch: 0:00:02.013931
epoch 11
time: 2022-10-02 14:51:49.038147
epoch 11 finished
time: 2022-10-02 14:51:49.708357
time for one epoch: 0:00:00.670210
epoch 12
time: 2022-10-02 14:51:49.708414
epoch 12 finished
time: 2022-10-02 14:51:50.342309
time for one epoch: 0:00:00.633895
epoch 13
time: 2022-10-02 14:51:50.342346
epoch 13 finished
time: 2022-10-02 14:51:51.120483
time for one epoch: 0:00:00.778137
epoch 14
time: 2022-10-02 14:51:51.120527
epoch 14 finished
time: 2022-10-02 14:51:51.818518
time for one epoch: 0:00:00.697991
epoch 15
time: 2022-10-02 14:51:51.818555
saving model for epoch 15
epoch 15 finished
time: 2022-10-02 14:51:53.887909
time for one epoch: 0:00:02.069354
epoch 16
time: 2022-10-02 14:51:53.887966
epoch 16 finished
time: 2022-10-02 14:51:54.638956
time for one epoch: 0:00:00.750990
epoch 17
time: 2022-10-02 14:51:54.638997
epoch 17 finished
time: 2022-10-02 14:51:55.352443
time for one epoch: 0:00:00.713446
epoch 18
time: 2022-10-02 14:51:55.352490
epoch 18 finished
time: 2022-10-02 14:51:56.041411
time for one epoch: 0:00:00.688921
epoch 19
time: 2022-10-02 14:51:56.041461
epoch 19 finished
time: 2022-10-02 14:51:56.683434
time for one epoch: 0:00:00.641973
epoch 20
time: 2022-10-02 14:51:56.683474
saving model for epoch 20
epoch 20 finished
time: 2022-10-02 14:51:58.132586
time for one epoch: 0:00:01.449112
epoch 21
time: 2022-10-02 14:51:58.132646
epoch 21 finished
time: 2022-10-02 14:51:58.956908
time for one epoch: 0:00:00.824262
epoch 22
time: 2022-10-02 14:51:58.956947
epoch 22 finished
time: 2022-10-02 14:51:59.660956
time for one epoch: 0:00:00.704009
epoch 23
time: 2022-10-02 14:51:59.660999
epoch 23 finished
time: 2022-10-02 14:52:00.359305
time for one epoch: 0:00:00.698306
epoch 24
time: 2022-10-02 14:52:00.359344
epoch 24 finished
time: 2022-10-02 14:52:01.096733
time for one epoch: 0:00:00.737389
epoch 25
time: 2022-10-02 14:52:01.096784
saving model for epoch 25
epoch 25 finished
time: 2022-10-02 14:52:02.663749
time for one epoch: 0:00:01.566965
epoch 26
time: 2022-10-02 14:52:02.663813
epoch 26 finished
time: 2022-10-02 14:52:03.444945
time for one epoch: 0:00:00.781132
epoch 27
time: 2022-10-02 14:52:03.444996
epoch 27 finished
time: 2022-10-02 14:52:04.191383
time for one epoch: 0:00:00.746387
epoch 28
time: 2022-10-02 14:52:04.191432
epoch 28 finished
time: 2022-10-02 14:52:04.841133
time for one epoch: 0:00:00.649701
epoch 29
time: 2022-10-02 14:52:04.841188
epoch 29 finished
time: 2022-10-02 14:52:05.454232
time for one epoch: 0:00:00.613044
epoch 30
time: 2022-10-02 14:52:05.454272
saving model for epoch 30
epoch 30 finished
time: 2022-10-02 14:52:06.953251
time for one epoch: 0:00:01.498979
training finished
```

-

RE: NLPHelper - A plugin dedicated to collecting training data for NLP models, posted in Plugin Releases

Could you add a way to filter out messages from a specified user, so that sentences sent by that person are not collected?

Or filter out keywords when exporting:

```
/NLPHelper exportBySQL "SELECT * FROM NLPH WHERE content NOT LIKE '%妈%';" gpt2
```

How should I write this statement if I want to add several filter words?
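In standard SQL, chaining one `content NOT LIKE '%word%'` condition per word with `AND` keeps only the rows that contain none of the words. The sketch below builds such a statement and checks it against an in-memory SQLite table. Which database engine NLPHelper actually uses, and any schema beyond the `NLPH` table and `content` column from the command above, are not confirmed here; `exclusion_query` and the sample rows are hypothetical.

```python
import sqlite3


def exclusion_query(table: str, words: list[str]) -> str:
    """Build SELECT * FROM <table> excluding rows whose content contains any word."""
    clauses = []
    for word in words:
        escaped = word.replace("'", "''")  # double single quotes: standard SQL escaping
        clauses.append(f"content NOT LIKE '%{escaped}%'")
    # AND means a row is kept only if it avoids every word.
    where = " WHERE " + " AND ".join(clauses) if clauses else ""
    return f"SELECT * FROM {table}{where};"


if __name__ == "__main__":
    sql = exclusion_query("NLPH", ["妈", "走开"])
    print(sql)
    # SELECT * FROM NLPH WHERE content NOT LIKE '%妈%' AND content NOT LIKE '%走开%';

    # Check the statement against a throwaway table (hypothetical one-column schema).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE NLPH (content TEXT)")
    conn.executemany("INSERT INTO NLPH VALUES (?)",
                     [("我妈在做饭",), ("今天吃什么",), ("你走开",)])
    print(conn.execute(sql).fetchall())  # [('今天吃什么',)]
    conn.close()
```

Presumably the printed statement can be placed between the quotes of the `exportBySQL` command in place of the single-word version. Note that `%` or `_` inside a filter word would still act as `LIKE` wildcards.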

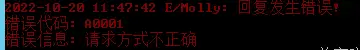

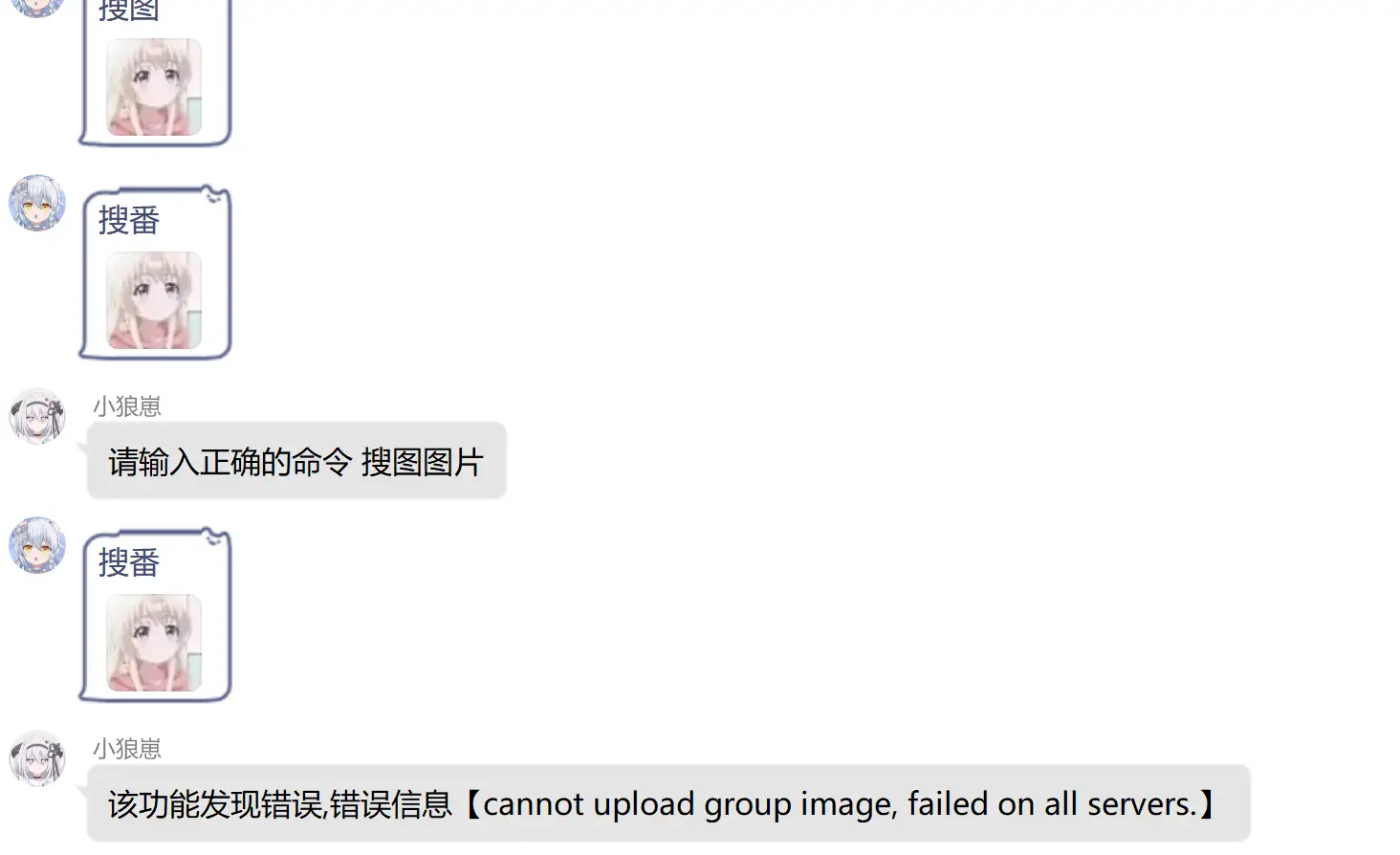

Hi, what's going on here? It worked before, but today it suddenly stopped working.
